Every day, virtual tons of email messages make their way to our office inbox, only to be fired back to their sources with snappy answers or incinerated in the imaginary furnaces of our junk bins. No, we don’t need any v1agacara. Yes, tell us more about Russian mail order brides. No, Halo will never come out for the Wii. Thank you for writing Game Revolution. We pride ourselves on answering just about every bizarre question that floats our way, but one in particular has us stymied. It goes something like this: “Why does Metacritic say you gave Bonker Jerks a 67 out of 100, when you gave it a B-?”

Whoops. What was unwittingly asked as a single, innocent query turned into a Russian Doll of infuriating mysteries, crazy policies and really bad math, one we’re forced to pay for, but certainly didn’t order. Where’s a vic0dine when you need one? Not here. Instead of avoiding the pain, we’ve decided to bring it in Mind over Meta, our totally unofficial and thoroughly unscientific study of the science behind metareview sites. We spent the last few weeks thumbing through the three biggest – RottenTomatoes, Metacritic and Gamerankings – in an effort to get to the bottom of the grading issue and to figure out exactly how these increasingly popular tools work.

Chances are you've used one of these over the past couple years, but for the uninitiated, metareview sites take all the reviews for a certain game, convert the grades into a 0-100 scale and average them together for an aggregate score. Ideally, this serves two functions: to show you what games might be worth checking out, and to provide a handy link hub for those unwilling to sift through countless gaming websites. Except they’re all broken. And occasionally, rotten.

RottenTomatoes.com

Before we began our inquisition, RottenTomatoes was our favorite aggregate site on the Internet, mainly for their old-school cred and the way they handle movie reviews. Thanks to their simple Fresh or Rotten rating, you could tell at a glance if the film was critically lauded or lambasted. So we were shocked when we discovered that their games section works about as well as a Uwe Boll trailer. Their biggest problem is that they consider any grade under 80% to be Rotten. According to the source:

“Although most publishers rate games on a 1-10 scale, it is a rarity for a game to get a score below 6. Because game reviews are mostly positive (a very high majority fall in the 7-10 range), the cutoff for a Fresh Tomato is raised to 8/10. This higher cutoff actually produces a wider spread of Tomatometer scores that is equivalent to movies; otherwise, almost all games are recommended!”

In other words, because the video game industry has so much trouble criticizing games, RottenTomatoes is going to do it for them. This completely defeats the purpose of a metareview site. If a critic gives a game a 7.9 – which any sort of math in any sort of country equates to at least “decent” – and RottenTomatoes converts that score to a Rotten rating, they are misleading consumers and misrepresenting their source. By manipulating the hard data to conform to their crazy theory, they are, in effect, metatorializing. Someone please add that to Merriam-Webster.

Then there's the cutoff for the aggregate itself: “In order for a movie to receive an overall rating of FRESH, the reading on the Tomatometer for that movie must be at least 60%. Otherwise, it is ROTTEN. Why 60%? We feel that 60% is a comfortable minimum for a movie to be recommended.” (They’re talking movies here, but this cutoff applies to every medium RottenTomatoes covers.)

Let’s take a look at how this scheme affects an actual game, such as Peter Jackson’s King Kong for the Xbox 360. King Kong is considered Rotten even though four of the seven reviews are Fresh, because four of seven works out to 57%, just shy of the 60% bar. You might also notice that one of the reviews doesn’t seem to be registering. However, since that reviewer gave the game a 7.9, his score would still be Rotten, and so would the game’s aggregate score.
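For the arithmetically inclined, here is a minimal sketch of the two-step math as we read it from the quotes above: each review is Fresh only if it hits the per-review cutoff (80 for games), and the game is Fresh only if at least 60% of its reviews are. The function names and the sample scores are ours, made up for illustration; this is not RottenTomatoes' actual code, and these are not King Kong's real review scores.

```python
def tomatometer(scores, fresh_cutoff=80):
    """Percentage of reviews whose 0-100 score meets the per-review Fresh cutoff."""
    fresh = sum(1 for score in scores if score >= fresh_cutoff)
    return 100 * fresh / len(scores)


def verdict(scores, fresh_cutoff=80, overall_cutoff=60):
    """A title is FRESH only if its Tomatometer reaches the overall cutoff."""
    if tomatometer(scores, fresh_cutoff) >= overall_cutoff:
        return "FRESH"
    return "ROTTEN"


# Hypothetical scores: four at or above 80, three just below it.
sample_scores = [90, 85, 82, 80, 79, 75, 70]

print(round(tomatometer(sample_scores)))       # share of Fresh reviews, in percent
print(verdict(sample_scores))                  # games cutoff of 80 per review
print(verdict(sample_scores, fresh_cutoff=60)) # a hypothetical lower per-review cutoff
```

With these invented numbers, no review falls below 70, yet the title still lands on the Rotten side of the line. Lower the per-review cutoff to a hypothetical 60 and the very same scores come out Fresh.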

How screwy does it get? Well, consider that The Godfather for the Xbox is 100% Fresh, while the PS2 version barely keeps its spot in the fridge with a 67. Meanwhile, Battlefield 2: Modern Combat 360 is downright Rotten (50%), which is nothing compared to the stench of Odama (which only managed to score a 0%; even we’re not that mean).

Part of the reason RottenTomatoes is so broken – aside from their stupid scoring conversion – is that they only use submitted reviews. Most RottenTomatoes scores are only based on the opinions of four or five reviewers, so you aren’t really getting an accurate look at the majority of the industry, but rather a completely random sampling of whoever happened to fill out the right forms. And more often than not, you aren’t getting any sort of look at all, because many games don’t have the minimum number of reviews (five) to warrant an aggregate score. If you look at the PS2 Racing section for the past 12 months, for instance, you’ll see that out of twenty-nine entries, only four games have tomatoes. Ironically, none of those feature reviews from IGN, and IGN owns RottenTomatoes. Time to sell!

For all this, we think RottenTomatoes.com deserves an 89 out of 100. Unfortunately, our cutoff for a Fresh is 90, making their site certifiably Rotten. What can we say? In California, we have high standards for our produce. And our critics.

Metacritic.com

It turns out most of the emails complaining about a GR score on a metareview site have something to do with Metacritic.com. For some reason, they skew our grades preposterously low, beyond the realm of any normal human calculus. Instead, you need drunken calculus, and we have that in spades. So we took Metacritic to task, first skimming through their online FAQ. Which, alas, is FUCed…and they can prove it.

While this system is obviously loony, we think we see where they went wrong, and so can you because it's plain as day in their very own aggregate breakdown chart. It seems Metacritic uses two standards: one for games, and one for everything else. But there is only one letter grade-to-number conversion scale, and it doesn’t match either breakdown. It represents a third standard, one of stark, raving madness. And since no one wants to be crazy, they cover it up by not showing original grades on their score pages. Why? Who knows, but readers look at these pages and think we’re irresponsible critics, when in fact Metacritic is loco. We give Metacritic’s funhouse mirror conversion scheme an F+. Or maybe an E- for lack of effort, because we know they aren’t trying to make us look like jerks. We suppose we should be consoled by the fact that Play magazine and Gameshark get the same treatment. Then again, those pubs rarely give grades below A’s, so the wacky conversion doesn’t affect them as much as it does cranky old GR. Jerks.

Now, you’d think one screwy grade wouldn’t matter on an aggregate site; after all, it’s just a drop in the bucket, right? Tell that to irritable Stardock chief Brad Wardell, who went ballistic over our review of Galactic Civilizations II. Though we don't often air our dirty laundry, we just had to swipe this snippet from Brad’s email barrage: “…your review isn't just the lowest review of the game but REALLY far down. As you point out, on Metacritic, it's a 67. The next lowest review is 80.”

We gave Galactic Civilizations II a B-. According to our own standard (the same one used by America's public education system), a B- converts to – you guessed it – an 80. We should have been right there next to the other low grade, not 13 points below it. Brad claims that, as a result, “[Game Revolution’s] current score ensures we'll never break the overall 9.0 average on there. That may sound silly, but for us, it's important to us because so much of our lives have been wrapped up making the game.” Far be it from us to point out that our lives are wrapped up in reviewing games, not fixing Metacritic (current article notwithstanding).

But maybe Brad is right. Maybe our score did screw his game out of a 9.0 average. We’ll never know for sure because Metacritic individually weighs its sources, meaning they decide how much or how little each site influences the aggregate score. What are the criteria for this forbidden formula? Who knows? It's their secret sauce and they keep it under tight wraps; there’s just no telling what their aggregate scores are actually based on. As a result, we can only speculate as to whether or not scores are aggregated from more than a handful of heavily weighted sites.

Don't believe us? According to Marc Doyle, a Metacritic founder: “We don’t think that a review from, say, Roger Ebert or Game Informer, for example, should be given the same weight as a review from a small regional newspaper or a brand new game critic.” Yes, he did just bring up Roger “Games Aren’t Art” Ebert and Game “GameStop” Informer in the same sentence as examples of important, weighty critics, who by virtue of their very rightness make smaller, newer critical entities less correct. We get the idea, but oh, the wrongness.

Metacritic’s desire to base their aggregate scores on their own interpretation of Who’s Who in the video game industry completely undermines all the hard work they do to provide you with an otherwise great service. Even though they claim to be an aggregate site and have a list of accepted publications a mile long, it’s entirely possible that only ten of them count. You have no way of knowing with Metacritic. They’re asking you to trust their judgment while simultaneously asking you to believe their inane science.

The kookiest part, though, is that it sort of works, and their aggregate scores are usually in line with what seems to be the critical consensus for any given game. You can look at Metacritic and reliably see how well a game has been received. They even color code their scores and include blurbs from each review so you can get an idea of the tone and focus without having to go anywhere. Yet they break our grades and their own, then cover their shoddy conversion by omitting the actual review scores and concealing the scheme they use to weigh their sources. Because they obviously graduated from the Underpants Gnome School of Business (Underpants + ??? = Profit!), we give them a final grade of Two Underpants. Two Underpants out of how many, you ask? Sorry, that’s our Secret Underpants Formula.
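Since the real weights are under lock and key, the best anyone can offer is a sketch with made-up ones. The snippet below shows how a weighted average can drift away from a plain average of the same scores. Every site name, score and weight here is hypothetical, and the formula is a textbook weighted mean, not anything Metacritic has confirmed using.

```python
def weighted_average(scores, weights):
    """Weighted mean of 0-100 scores; `weights` maps each source to its weight."""
    total_weight = sum(weights[source] for source in scores)
    weighted_sum = sum(score * weights[source] for source, score in scores.items())
    return weighted_sum / total_weight


# Hypothetical review scores for one game.
scores = {"Big Site": 92, "Big Mag": 90, "Small Site": 88, "Cranky Site": 67}

# Everyone counts the same...
equal_weights = {source: 1.0 for source in scores}

# ...or somebody decides who matters (invented weights).
secret_sauce = {"Big Site": 3.0, "Big Mag": 1.0, "Small Site": 1.0, "Cranky Site": 0.5}

plain = weighted_average(scores, equal_weights)
sauced = weighted_average(scores, secret_sauce)
print(plain, round(sauced, 1))
```

With these invented inputs the plain average is 84.25 and the weighted one is roughly 88.6, a gap of more than four points from identical reviews. Without the weights, a reader cannot tell which kind of number they are looking at.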

GameRankings.com

By now you’re probably thinking metareview sites can’t get much worse, and you’re right. Gamerankings is functionally better than either Metacritic or RottenTomatoes. They automatically track tons of sites, they’re completely transparent with their results, and their averages actually are pretty good indications of whether or not you should research a game further. Sometimes. Then again, sometimes they post scores for imports and don’t tell you the game you’re looking at is only available in Japan. And since their aggregates aren’t based on a minimum number of reviews, sometimes their aggregates are really just one review. They used to count Ziff-Davis reviews twice, and at this very moment they’re counting a Gamespot review that doesn’t even exist. Uh oh.

So, we used a site known as Alexa.com to sort the reviews for various games into two groups: reputable and not reputable. Alexa ranks every site on the Internet based in large part on the propagation of its nasty toolbar. According to Alexa, Gamespot is ranked around 200, while Game Informer is at 37,000 (right now, GR is at 12,000). It’s certainly not precise, sort of a wonky Nielsen rating, but we felt comfortable doing this because Gamerankings’ own Site Statistics page has a defunct Alexa field. We thought this especially telling, and decided to fix it for them, GR style.

Using an Alexa ranking of 40,000 as our cutoff, we split the review sources for several games and averaged the scores. What we found shocked us. If those below 40,000 and those above didn’t entirely agree, they were always within one or two points. In fact, the less reputable group was frequently more conservative with its grades, serving to slightly check pubs like Game Informer, GameSpy and Play, all of whom love to dish out 100s. To our relative surprise, Gamerankings’ aggregate scores illustrate consensus among critics great and small.

Remember, though, that this is their job. In spite of the fact that they’re better at what they do than Metacritic or RottenTomatoes, their success seems to be less the product of virtue than vice. Whoever wrote their Help section included this little gem of an example: “I used to include scores from Gamers.com in the average ratios, however, since their reviews come from Ziff-Davis magazines, it was including the same score twice. My Answer to people that used to complain about it was simple, you get a big magazine and a big website and your opinion can count twice too.”

So wait, if we ran the same review score on Game Revolution and, say, Game Evolution, Game Devolution and Game Helluvalution, it would count four times? Awesome. Indeed, Gamerankings displays a bias toward big publications even as their very own results prove them wrong. We should note that they eventually stopped counting Ziff-Davis reviews twice, albeit gracelessly: “I finally gave in and list(ed) Gamers.com under other reviews and only use the score from the print version. Oh and the day I did that, I started getting all kinds of emails for not counting them. Any change = 1/3 Happy, 1/3 Unhappy, 1/3 Never Notice.”
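For anyone who wants to repeat the Alexa split from earlier in this section, it boils down to something like the sketch below: sort each review into one of two piles by its site's rank, average each pile, and compare. The 40,000 cutoff comes from our experiment. The ranks and scores in the sample list are invented for illustration and do not belong to any real site or game.

```python
from statistics import mean

ALEXA_CUTOFF = 40_000  # a lower rank number means a more heavily trafficked site


def split_by_rank(reviews, cutoff=ALEXA_CUTOFF):
    """Split (alexa_rank, score) pairs into big-site and small-site score lists."""
    big = [score for rank, score in reviews if rank <= cutoff]
    small = [score for rank, score in reviews if rank > cutoff]
    return big, small


# Hypothetical (alexa_rank, score) pairs for a single game.
reviews = [
    (200, 90),
    (12_000, 80),
    (37_000, 95),
    (85_000, 88),
    (150_000, 86),
    (400_000, 87),
]

big, small = split_by_rank(reviews)
gap = mean(big) - mean(small)
print(round(mean(big), 1), round(mean(small), 1), round(gap, 1))
```

In this toy data the two piles land within a point or two of each other, which is the pattern we kept running into. Your mileage may vary with real numbers.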

Gamespot pulled their LocoRoco review because it was based on an imported copy of the game. Since their site reviews North American releases, and the North American version is due in September (and under embargo, we might add), they decided to wait and “see if any content has been added/changed/removed and so on and so forth,” as Gamespot’s Alex Navarro told Joystiq.com. Good on you, Alex.

Gamerankings, on the other hand, makes no such distinctions. As of 8/4/06, every review in their LocoRoco list, aside from the one that doesn’t exist, is for either a UK or Japanese version. However, both are included in the same aggregate, in spite of the fact that one is in Japanese. When the North American version ships, it will presumably be lumped in with the UK and Japanese versions as though they were all North American reviews of North American games. Before you burn the flag and protest, know that this isn’t about patriotism – it’s about the fact that different nations have different cultural standards. In Germany, for example, games that include either blood or Nazis are banned. Publishers know this and they make changes to their products accordingly. Gamerankings, on the other hand, doesn’t even seem to know what the word “standard” means. If they did, their minimum number of reviews for an aggregate score might be higher than one. Behold.

We aren’t fond of the way they break down letter grades, either, although this is admittedly the least of their troubles. Basically, they get A’s and B’s right, and then they skew C’s and D’s too high. Their system is arbitrary and a little retarded, and so is their defense of it as seen on their Help page: “I stand by my system, trust me, I have put more thought and tried more things than any of you ever will, and I feel this is the best way to reflect the scores.” Evidently, the only thing Gamerankings’ founders haven’t tried is high school English.

Okay, that’s harsh, but so is skewing our grades and featuring imaginary reviews of foreign video games. They’re the best aggregate site of the lot for their transparency and breadth of sources, yet occasionally they completely misrepresent games’ critical responses due to their lack of a review limit and their inability to tell an import from a final release. For that we give them an aggregate score of 50 out of 100. Even though that’s just our opinion, we want you to know it’s shared by everybody in North America, Marrakesh and Burma.

So it’s safe to say these sites have some work to do, but that isn’t stopping the industry from taking them pretty seriously. At EA’s recent unveiling of the incredible Valve Portal system, Valve overlord Gabe Newell noted the success of Half-Life 2: Episode 1 by using its Metacritic score. That’s unsurprising considering the company's Steam service has Metacritic scores embedded right into its download manager. We’ve seen Gamerankings ratings listed on promotional websites time and again. And then there was our own unfortunate experience with Galactic Civ II.

We agree, although we'd take issue with the "good" part. Gamerankings provides the bare minimum with its basic functionality and transparency. Metacritic also fulfills the basic functions of a meta site, except that they come to conclusions for you instead of giving you the tools you need to draw your own. And RottenTomatoes…well, we’d like to pretend they don’t matter, but then we Google our own names. Sigh. And we're happy to remove the chip from our shoulder so long as users, reviewers and industry types understand that current metareview sites are about as scientifically accurate as Back to the Future. The inherent concept is great, but until someone decides to fix the rampant metatorializing, bizarre math and questionable standards, it's truly anyone's game. Oh, and in case you were wondering, by averaging Rotten, Two Underpants and 50, our aggregate score for all three metareview sites is YELLOW.

It’s a good question and it deserves a good answer, so we donned our white lab suits to make us look like scientists and started hacking away at the problem.

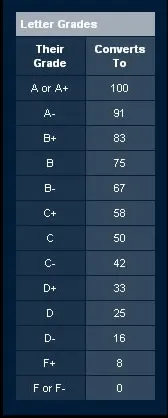

Metacritic’s letter grade-to-number conversion scale is insane. It starts off okay with the A’s all landing in the 91-100 range – a respectable 10 point spread – but things quickly go bonkers when you give a game a B+, which, according to Metacritic, converts to an 83. What happened to 84-90? Apparently those digits are being used to prop up the rest of the B's, which run from 83 (ugh) to 67 (double ugh). That’s a 19 point range, holmes, for three grades. The C’s suddenly dip an extra 9 points, starting at a 58 for the C+ and ending with a C- at 42. That might be the answer to life itself, but it only made us more confused. And it just gets messier as you plow down towards the F, which they laughably break down into an F+, F and F-.

When we began this piece, the main thing that bothered us about Gamerankings was the fact that you’d see scores from sites like

Gamerankings must be in that last third, because they still haven’t noticed that the Gamespot review at the top of their

Luckily, however, most of the industry doesn’t seem too infatuated with the numbers. We talked to several PR people, designers, and brand managers during our research for this piece, and by and large they use these sites the right way: as macro resources rather than accurate converters. Bethesda’s Pete Hines summed up their sentiments when he said, “We definitely use sites like Gamerankings and Metacritic to see how titles are doing overall because they're good resources for everything that's been said about our game.”
